Opportunity and responsibility in the era of the Intelligent Cloud and Intelligent Edge

“The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it.”

Nearly three decades ago, Mark Weiser, then the chief scientist at Xerox PARC, wrote this in an influential paper predicting a future in which computing would soon be omnipresent.

That world is here. Computing is no longer just an interface that you go to; it is embedded everywhere, in places and things, in our homes and cities. The world is a computer.

Today at Microsoft Build in Seattle, I shared our vision and roadmap for how developers can lead in this new era.

Every part of our lives, from our homes and cars to our workplaces, factories and cities, is being transformed by digital technology. So is every sector of our economy, whether it's precision agriculture, precision medicine, autonomous cars or autonomous drones, personalized medicine or personalized banking.

This represents an incredible amount of data, but also a broader opportunity to create new, rich computing experiences. And if you think of the world as a computer, developers are a new seat of power in this digitally connected world.

Yet, with this opportunity comes great responsibility. Hans Jonas, one of the pioneers of biomedical ethics, wrote, “Act so that the effects of your action are compatible with the permanence of genuine human life.” These words are relevant to us today. We must ensure technology’s benefits reach people more broadly across society and that the technologies we create are trusted by the individuals and organizations that use them.

We are working to instill this trust in three key areas. The first is privacy. We believe privacy is a fundamental human right, which is why we are working hard to ensure compliance with GDPR and have litigated all the way to the Supreme Court to protect the legal rights of our customers.

The second is cybersecurity. We need to act with collective responsibility to help keep the world safe. We have led bold new cross-industry initiatives, like the Cybersecurity Tech Accord, and have called on governments to do more, through initiatives such as a Digital Geneva Convention.

And, finally, as we make advancements in AI, it’s critical that we take the steps needed to ensure that AI works in an ethical and responsible way. We need to ask ourselves, not only what can computers do, but what should they do? We are therefore working to ensure we translate our industry-leading research in this area into broadly implementable tools and guidance that everyone can leverage.

This opportunity and responsibility are what lie behind Microsoft’s mission to empower every person and every organization on the planet to achieve more. Technology paradigms come and go, but our mission endures.

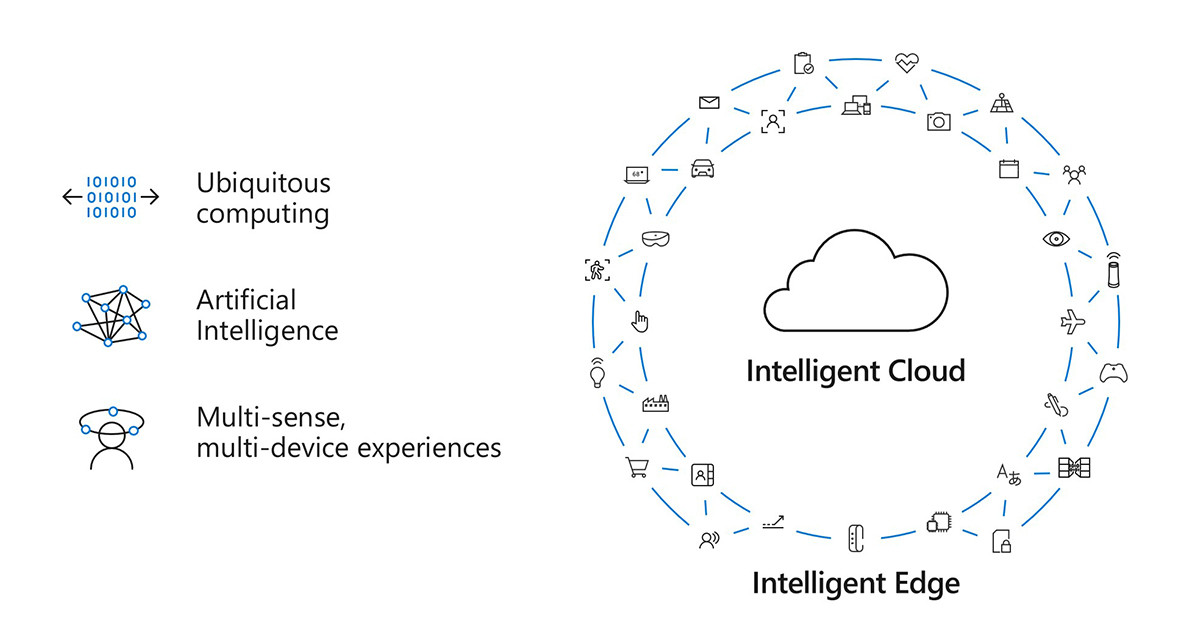

The era of the intelligent cloud and intelligent edge is upon us. This morning, I shared how this will be driven by advances in ubiquitous computing, artificial intelligence, and multi-sense, multi-device experiences.

First, ubiquitous computing. Azure is being built as the world’s computer. We deliver cloud services from 50 regions around the planet, more than any other cloud provider, and have the broadest set of compliance certifications in the industry. And we’re seeing tremendous momentum today with brands across all industries, including financial services, retail, automotive, and consumer packaged goods, using Azure at scale.

We shipped 650 new capabilities in Azure over the last year and are introducing 70 at Build alone. Azure Stack is an extension of Azure and enables a truly consistent hybrid cloud platform, including the ability to decide where applications and workloads should reside. Azure IoT Edge allows devices on the edge to act on their own and connect only when needed. And last month, we announced Azure Sphere, a new solution to secure the 9 billion microcontroller-powered devices that are built and deployed every year, completing the ubiquitous computing fabric.

Today we went even further, announcing that we are open sourcing the Azure IoT Runtime so that developers can modify, debug and have more transparency and control over their edge applications. Together with Qualcomm, we are also creating a vision AI developer kit running Azure IoT Edge to enable new camera-based Internet of Things (IoT) solutions. And, with DJI, the world’s biggest drone company, we are creating a new SDK for Windows 10 PCs to bring full flight control and real-time data transfer capabilities to Windows 10 devices.

But it’s not about celebrating our breakthroughs; it’s about translating these into tools and frameworks so that every developer can be an AI developer.

With over 30 cognitive APIs, we enable scenarios such as text to speech, speech to text, and speech recognition, and our Cognitive Services are the only AI services that let you custom-train these AI capabilities across all your scenarios.

To amplify this, today we announced a speech devices SDK and reference kit to deliver superior audio processing from multi-channel sources for more accurate speech recognition, including noise cancellation and far-field voice. With Cognitive Services, you can custom train a model with audio recordings to achieve maximum accuracy in noisy environments. For example, Samjoy, a startup focused on travel guides and assistance, is working with Xiaomi to provide a Chinese-English translation device that you can use naturally in a conversation, removing the language barrier.

We also introduced Project Kinect for Azure, a powerful sensor kit with spatial, human and object understanding to enable new scenarios across security, health and manufacturing. When we shipped Kinect 8 years ago, it was the first AI device with speech, gaze, and vision, and we’ve since made a tremendous amount of progress with these technologies as part of HoloLens. Project Kinect for Azure will bring together some of the best spatial understanding, skeletal tracking, and object recognition, with minimal depth noise and an ultra-wide field of view, enabling new experiences across robotics, automation, and holoportation.

And we’re driving new advances in our underlying cloud infrastructure so that developers can build next-generation AI applications on our cloud. We’re building the world’s first AI supercomputer in Azure. We announced a preview of Project Brainwave, an architecture for deep neural network processing, which will make Azure the fastest cloud for AI, with true real-time AI. Brainwave is over five times faster than TPU per inference.

In the industrial space, manufacturing solutions provider Jabil is already working with us to use these advances to improve quality assurance.

A third core technology driving the future of the intelligent cloud and intelligent edge is the shift from putting the device at the center to putting people at the center, which I describe as multi-sense, multi-device experiences. Microsoft 365 is the coming together of Windows and Office, built for multi-sense, multi-device experiences that put people at the center of work and life. If you’re a Windows or an Office developer, you are already a Microsoft 365 developer.

Think of what you do today. You get up in the morning, use an app like Outlook Mobile to check e-mail. In the car, you might connect to a conference call through the Skype app on the dashboard. At the office, you use Word to co-edit with people across the country; in a conference room, you collaborate with a large display.

Multiple devices, used in multiple locations, by multiple people using multiple senses. You can build these experiences with Microsoft 365.

Cortana is a quintessential multi-device and multi-sense experience. Built into Windows and available on phones, Cortana is being integrated into automobiles and into applications like Outlook and Teams, and it can now converse with other digital agents. We’ve partnered with our friends at Amazon to imagine what it means for two personal agents to better assist you while helping each other.

But we don’t stop at the digital world. We can now reason with intelligence not only over digital spaces but over the physical world as well. We’re seeing how advances in mixed reality can empower the more than 2 billion firstline workers, who account for 80 percent of the world’s workforce. We introduced Microsoft Remote Assist, which allows firstline workers to reach an expert where and when they need one and share what they’re seeing, as well as Microsoft Layout, which lets teams share and edit designs in real time.

As computing becomes woven into the fabric of our lives, the opportunities are endless. But it’s not just about adding more screens or building algorithms that hijack our attention. It’s about helping people focus on what matters most to them.

Even more importantly, it’s about empowering everyone to fully participate in our society and our economy using technology. This includes the more than 1 billion people around the world with disabilities.

Accessibility is something that I’m passionate about, and in the past few years it has been a real privilege for me to watch a group of passionate people across Microsoft tackle tough challenges and succeed with amazing advances to help people with disabilities.

Microsoft today announced AI for Accessibility, an initiative to provide grants and support to research organizations, NGOs and entrepreneurs, so they can bring their passion and enthusiasm to helping people with disabilities across the globe fully participate in our society and our economy.

Source: LinkedIn